In my previous post I explained how to generate a single ROC curve for a single classifier. In practice, however, you will often need to generate multiple ROC curves, one for each of several classifiers, because plotting them together is an important way to compare classifier performance. The following steps show how to draw multiple ROC curves for multiple classifiers.

Once all the components needed to draw the ROC curves are on the layout, the next step is to connect them.

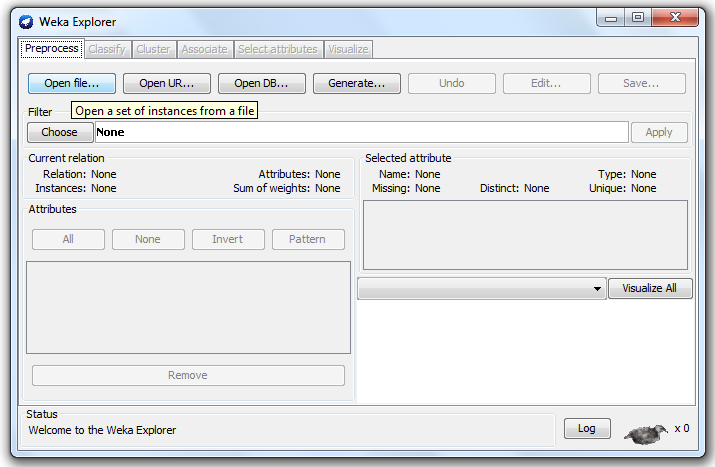

| Figure 2 |

- Once the knowledge flow interface has loaded, the first step is to select ArffLoader from the DataSources menu; it is used to input the data. Drag ArffLoader into the knowledge flow layout as shown in Figure 3.

- From the evaluation tab select the ClassAssigner and put it into the knowledge flow layout.

- From the evaluation tab select the ClassValuePicker and put it into the knowledge flow layout.

- From the evaluation tab select the CrossValidationFoldMaker (we are using 10-fold cross-validation) and put it into the knowledge flow layout.

- The next step is to choose the classifiers from the classifiers tab. In this tutorial I am using Random Forest (RF) and Naïve Bayes. Select RF from the trees tab and Naïve Bayes from the bayes tab.

- To evaluate the performance of the classifiers, select the ClassifierPerformanceEvaluator from the evaluation tab and put it into the knowledge flow layout. We need two performance evaluators, one for each classifier.

- Finally, to plot the ROC curves, select ModelPerformanceChart from the visualization tab and put it into the knowledge flow layout.

| Figure 3 |

- To connect the ArffLoader to the ClassAssigner, right-click on the ArffLoader, select dataset, and connect it to the ClassAssigner.

| Figure 4 |

- Right-click on the ClassAssigner, select dataset, and connect it to the ClassValuePicker.

- Right-click on the ClassValuePicker, select dataset, and connect it to the CrossValidationFoldMaker.

- Next we have to assign the training and test data to the classifiers.

- Right-click on the CrossValidationFoldMaker, select the training data, and connect it to the RF classifier. Right-click on the CrossValidationFoldMaker again, select the testing data, and connect it to the RF classifier. Do the same for the Naïve Bayes classifier.

- Right-click on the RF classifier, select batchClassifier, and connect it to its ClassifierPerformanceEvaluator. Do the same for the Naïve Bayes classifier and the second evaluator.

- Right-click on each ClassifierPerformanceEvaluator, select thresholdData, and connect it to the ModelPerformanceChart. The complete arrangement now looks like figure 4.

| Figure 5 |
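The connections described above can be summarised as a small graph. The following is only a plain-Python sketch of the wiring, not a knowledge flow API: the labels `Evaluator_RF` and `Evaluator_NB` are hypothetical names for the two ClassifierPerformanceEvaluator components, and `trainingSet` / `testSet` are shorthand for the training and testing data connections. It checks that data loaded by the ArffLoader can reach the chart and lists which evaluators feed a curve into it.

```python
from collections import defaultdict

# Each edge is (source component, connection type, target component).
# Evaluator_RF / Evaluator_NB are hypothetical labels for the two evaluators.
EDGES = [
    ("ArffLoader", "dataset", "ClassAssigner"),
    ("ClassAssigner", "dataset", "ClassValuePicker"),
    ("ClassValuePicker", "dataset", "CrossValidationFoldMaker"),
    ("CrossValidationFoldMaker", "trainingSet", "RandomForest"),
    ("CrossValidationFoldMaker", "testSet", "RandomForest"),
    ("CrossValidationFoldMaker", "trainingSet", "NaiveBayes"),
    ("CrossValidationFoldMaker", "testSet", "NaiveBayes"),
    ("RandomForest", "batchClassifier", "Evaluator_RF"),
    ("NaiveBayes", "batchClassifier", "Evaluator_NB"),
    ("Evaluator_RF", "thresholdData", "ModelPerformanceChart"),
    ("Evaluator_NB", "thresholdData", "ModelPerformanceChart"),
]


def reaches(edges, start, goal):
    """Return True if `goal` can be reached from `start` by following edges."""
    graph = defaultdict(list)
    for source, _connection, target in edges:
        graph[source].append(target)
    stack, seen = [start], set()
    while stack:
        node = stack.pop()
        if node == goal:
            return True
        if node in seen:
            continue
        seen.add(node)
        stack.extend(graph[node])
    return False


def curves_on_chart(edges, chart="ModelPerformanceChart"):
    """List the components that send thresholdData to the chart (one curve each)."""
    return sorted(
        source
        for source, connection, target in edges
        if connection == "thresholdData" and target == chart
    )


print(reaches(EDGES, "ArffLoader", "ModelPerformanceChart"))
print(curves_on_chart(EDGES))
```

If one of the two `thresholdData` edges were missing, `curves_on_chart` would list only one evaluator, which corresponds to a chart showing a single ROC curve.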

- Next we input the data: right-click on the ArffLoader and select configure. Browse for the ARFF file on your system and click the OK button.

- Right-click on the ClassValuePicker and select the class for which the ROC curve will be drawn.

- Right-click on the CrossValidationFoldMaker and select how many folds to use when splitting the data into training and testing sets (the default is 10-fold cross-validation). Ten-fold cross-validation means the input is divided into ten parts; in each of the ten runs, 90% of the data is used for training and the remaining 10% for testing.

- Next, to run the model, right-click on the ArffLoader and select start loading.

| Figure 6 |
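To make the 90% / 10% split concrete, here is a minimal sketch of how k-fold cross-validation partitions a dataset. It is an illustration only: the function name `k_fold_indices` is hypothetical, and the real CrossValidationFoldMaker may also shuffle or stratify the data, which this sketch omits.

```python
def k_fold_indices(n_instances, k=10):
    """Yield (train_indices, test_indices) for each of the k folds."""
    indices = list(range(n_instances))
    for fold in range(k):
        # Every k-th instance, starting at offset `fold`, goes to the test set.
        test = indices[fold::k]
        held_out = set(test)
        # All remaining instances form the training set for this fold.
        train = [i for i in indices if i not in held_out]
        yield train, test


# With 100 instances and 10 folds, each fold trains on 90 and tests on 10.
for fold_number, (train, test) in enumerate(k_fold_indices(100, k=10), start=1):
    print(fold_number, len(train), len(test))
```

Across the ten folds every instance appears in a test set exactly once, so each classifier ends up with one prediction per instance.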

To see the ROC curves, right-click on the ModelPerformanceChart and select show chart. The result will look like figure 7.

| Figure 7 |

| Figure 8 |
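For readers who want to see what the chart is actually plotting, the sketch below computes ROC points and the area under the curve for two classifiers by hand. It is a simplified illustration, not the tool's implementation: the function names are hypothetical, the labels and scores are made-up toy values rather than results from RF or Naïve Bayes, and the `positive` argument plays the role of the class chosen in the ClassValuePicker.

```python
def roc_points(labels, scores, positive):
    """Return (false positive rate, true positive rate) points for one classifier.

    Assumes at least one positive and one negative instance.
    """
    is_positive = [label == positive for label in labels]
    n_pos = sum(is_positive)
    n_neg = len(is_positive) - n_pos
    # Rank instances from the highest score to the lowest.
    ranked = sorted(zip(scores, is_positive), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Consume all instances tied at this score before emitting a point.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc(points):
    """Area under the ROC curve using the trapezoidal rule."""
    return sum(
        (x2 - x1) * (y1 + y2) / 2
        for (x1, y1), (x2, y2) in zip(points, points[1:])
    )


# Toy data: true classes and the score each classifier gives to class "yes".
labels = ["yes", "yes", "no", "yes", "no", "no"]
scores_a = [0.9, 0.8, 0.7, 0.6, 0.3, 0.2]  # hypothetical classifier A
scores_b = [0.6, 0.4, 0.7, 0.9, 0.5, 0.2]  # hypothetical classifier B

for name, scores in [("A", scores_a), ("B", scores_b)]:
    curve = roc_points(labels, scores, positive="yes")
    print(name, round(auc(curve), 3))
```

Plotting both lists of points on the same axes gives the kind of multi-curve chart shown above; the curve that lies closer to the top-left corner (and has the larger area) belongs to the better-ranking classifier for the chosen class.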